I’m a Mechanical Engineer from Brazil with a Master’s in Economics & Public Policy from UCLA (full scholarship from the Lemann Foundation). In 2021, I paused a prestigious PhD in Economics in the Netherlands to care for my family during the pandemic - a moment that also marked my transition into data full-time.

Since then, I’ve been working remotely as a Data & Analytics Engineer, building end-to-end data platforms with dbt, Snowflake, Redshift, SQL, Python, and AWS for global clients across the US, UK, Spain, and Brazil - in industries such as airlines, sports, e-commerce, and IoT. My focus is on designing scalable data architectures, improving data quality, and enabling AI-driven analytics.

Earlier in my career, I helped build Stone Payments (NASDAQ: STNE) as its first employee and founded MePrepara, an online math prep platform with 140+ video lessons that helped low-income Brazilian students prepare for GRE and GMAT exams.

Throughout my journey, I’ve been recognized with awards and scholarships from UCLA, Yale University, Lemann Foundation, the General Electric Foundation, and The Club of Rome.

I bring a mix of engineering, economics, and business to the table, along with an enduring belief that education and technology can expand opportunity everywhere.

Projects

- Data & Analytics Engineering

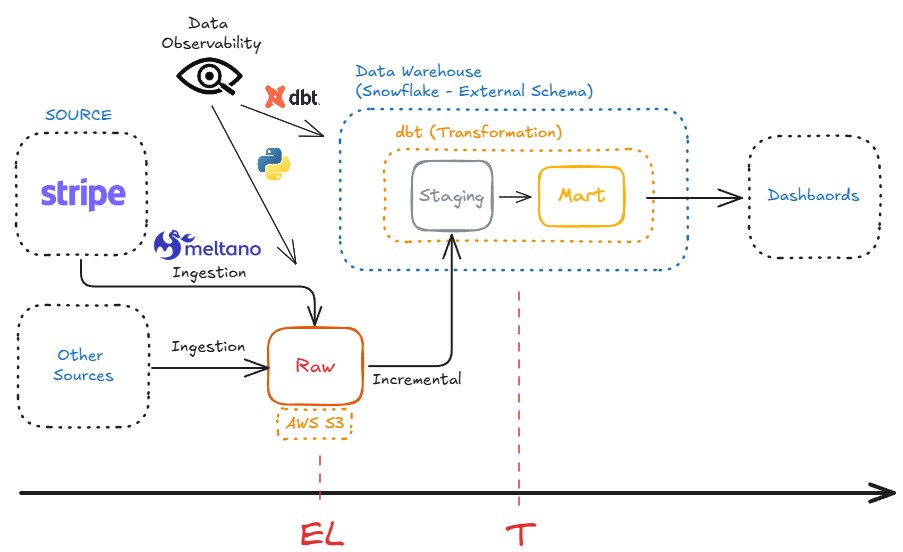

- Tech Stack: SQL, Python, Meltano, dbt, Snowflake, AWS, GitHub Actions, CI-CD

- Snowflake Architecture, Stored Procedures, Stream, and Tasks - Fundamentals

- Data Observability for Raw Stripe Data in S3 with CI-CD

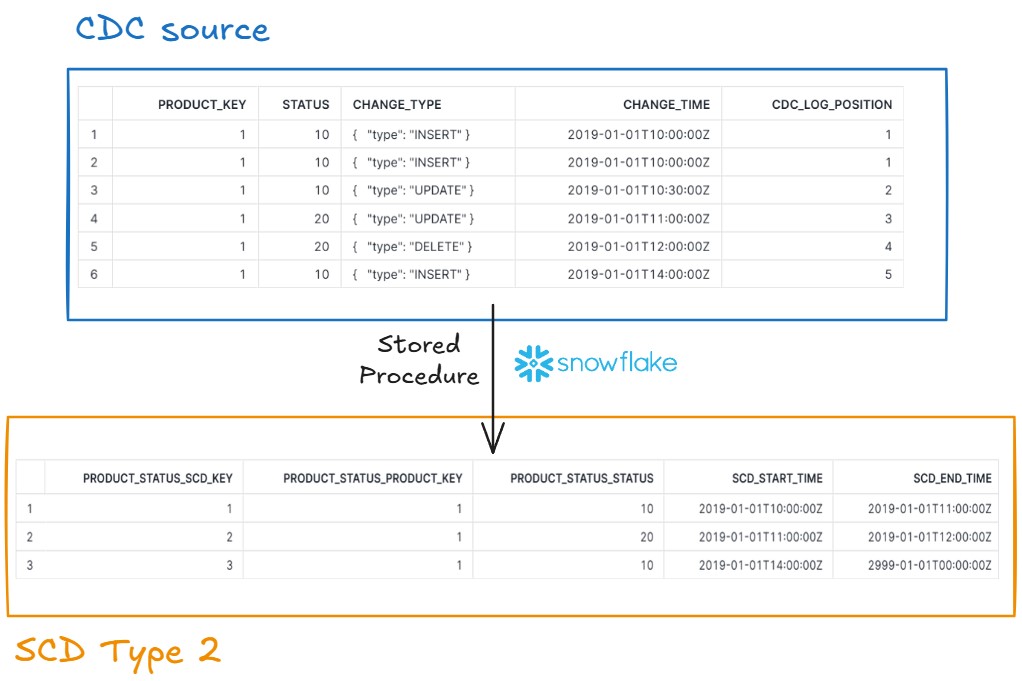

- Implementing CDC with SCD Techniques: CDC Source -> SCD Type 2

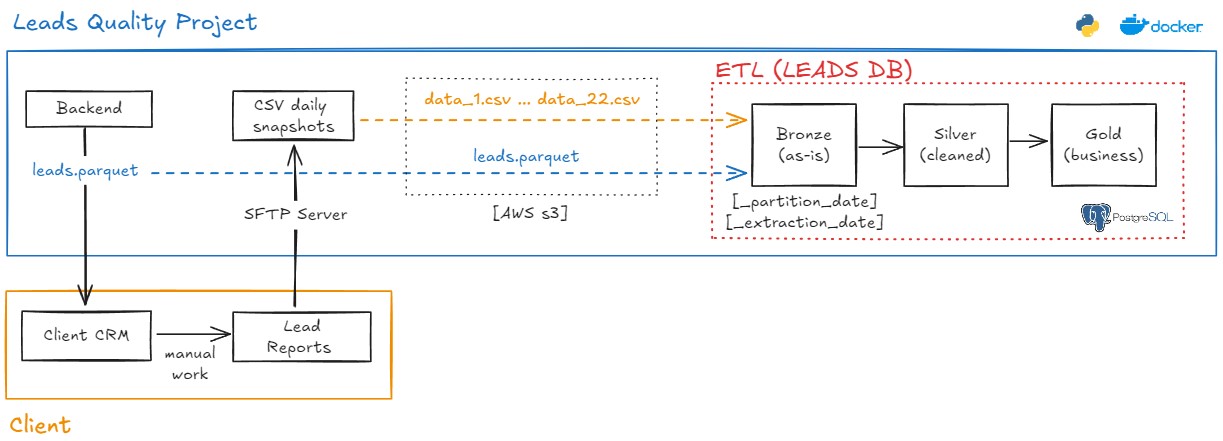

- Lead Quality Process: AWS S3 Bucket (Parquet, CSVs) -> Postgres

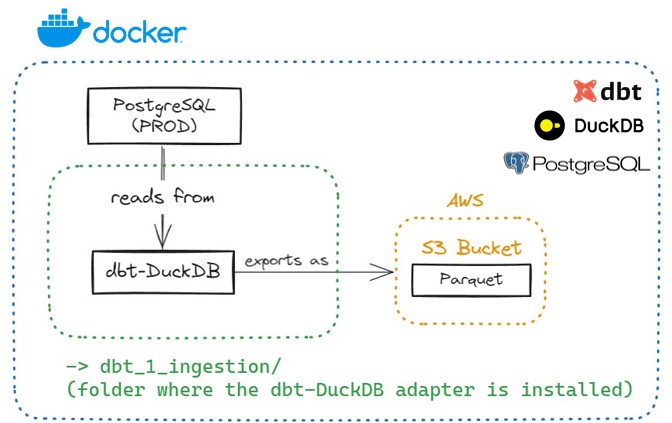

- Part 1 - Ingestion - dbt-DuckDB: Postgres -> AWS S3 Bucket (Parquet)

- Part 2 - Transformation - dbt-Snowflake: AWS S3 Bucket (Parquet) -> Snowflake External Tables

- ETL for Machine Learning (Churn Prediction)

- Migrating ETL to dbt

- ETL from Crypto API to Tableau

- Applied Data Science and Machine Learning

- Tech Stack: Python (Pandas, Numpy, Statsmodels, Scikit-Learn, CausalInference)

- Causal Inference: Effect of a New Recommendation System

- Causal Inference: Effect of a Customer-Satisfaction Program

- Data Analytics with Python (Best Practices)

- [Teaching] Advanced Mathematics, Statistics, and Machine Learning

- Tech Stack: Jekyll, Markdown, LaTeX, GitHub Pages & Quarto

- Foundations of Data Science & Causal Machine Learning: A Mathematical Journey

See all projects below!

Data & Analytics Engineering

Snowflake Architecture, Stored Procedures, Stream, and Tasks - Fundamentals

This guide covers the four essential pillars of Snowflake mastery:

- Snowflake Architecture & Performance Fundamentals

- Procedural SQL, Streams & Tasks

- AWS S3 Integration & Data Loading

- Orchestration & ELT Design

Data Observability for Raw Stripe Data in S3 with CI-CD

This project provides a lightweight observability layer for raw Stripe data landing in S3 from Meltano ingestion. The goal is to give immediate confidence in the raw layer before any downstream transformations or analytics.

Key features:

- File presence and content validation: Ensures that each expected CSV exists and is not empty for all configured Stripe streams (charges, events, customers, refunds, etc.).

- Schema validation: Flattens nested JSON headers and checks required fields to detect schema drift early.

- Single entrypoint: `run_checks.sh` orchestrates all checks and provides immediate feedback on failures.

- Configurable environment: Uses a `.env` file for credentials and configuration.

- AWS integration: Uses `boto3` to interact with S3 securely and efficiently.

- Supports CI/CD: Integrates with GitHub Actions workflows to run automatically or on-demand, ensuring downstream transformations are built on a trusted raw layer.
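The schema check described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the `REQUIRED_FIELDS` mapping, the function names, and the dotted-key convention are assumptions made for the example, and it works on an in-memory JSON string rather than on files read from S3.

```python
import json

# Hypothetical required fields per Stripe stream (an assumption for this sketch).
REQUIRED_FIELDS = {
    "charges": {"id", "amount", "currency", "billing_details.address.country"},
}


def flatten_keys(obj, prefix=""):
    """Return the set of dotted key paths found in a nested dict."""
    keys = set()
    for key, value in obj.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict) and value:
            # Recurse into non-empty nested objects.
            keys |= flatten_keys(value, path)
        else:
            keys.add(path)
    return keys


def missing_fields(raw_json, stream):
    """List required fields that are absent from one raw record."""
    record = json.loads(raw_json)
    return sorted(REQUIRED_FIELDS[stream] - flatten_keys(record))


ok = '{"id": "ch_1", "amount": 1000, "currency": "usd", "billing_details": {"address": {"country": "BR"}}}'
drifted = '{"id": "ch_2", "amount": 500, "billing_details": {"address": {}}}'
print(missing_fields(ok, "charges"))
print(missing_fields(drifted, "charges"))
```

A non-empty result signals schema drift for that stream, which a wrapper script could turn into a non-zero exit code so that a CI job fails early.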

Implementing an SCD Type 2 dimension from a CDC source using Snowflake’s Stored Procedures and Data Quality Checks.

This task involves implementing a Slowly Changing Dimension (SCD) Type 2 to track changes to a product’s status over time within Snowflake. The source for this dimension is a Change Data Capture (CDC) stream that logs all data modification events (DML operations) from a transactional system. The main goal is to maintain historical records of product status changes based on an ordered, deduplicated stream of changes, while ensuring idempotency and applying basic data quality checks.
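To make the SCD Type 2 logic concrete, here is a small Python sketch of the idea. It is not the Snowflake stored procedure used in the project: the `DimRow` class, the `build_scd2` function, and the event shape `(product_id, status, changed_at)` are assumptions for illustration, and it rebuilds the dimension from the full event history instead of merging increments.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DimRow:
    product_id: int
    status: str
    valid_from: str
    valid_to: Optional[str] = None  # None marks the current version


def build_scd2(events):
    """Build SCD Type 2 rows from (product_id, status, changed_at) events.

    Timestamps are assumed to be ISO 8601 strings in one consistent format,
    so that string ordering matches chronological ordering.
    """
    # Deduplicate exact repeats and sort, so replaying events gives the same result.
    ordered = sorted(set(events), key=lambda e: (e[0], e[2], e[1]))
    rows = []
    current = {}  # product_id -> currently open DimRow
    for product_id, status, changed_at in ordered:
        open_row = current.get(product_id)
        if open_row is not None and open_row.status == status:
            continue  # status did not change: no new version
        if open_row is not None:
            open_row.valid_to = changed_at  # close the previous version
        new_row = DimRow(product_id, status, changed_at)
        rows.append(new_row)
        current[product_id] = new_row
    return rows


events = [
    (1, "active", "2024-01-01T00:00:00"),
    (1, "active", "2024-01-01T00:00:00"),  # duplicate CDC event
    (1, "inactive", "2024-02-01T00:00:00"),
    (1, "inactive", "2024-02-15T00:00:00"),  # update without a status change
    (2, "active", "2024-01-10T00:00:00"),
]
for row in build_scd2(events):
    print(row)
```

Because the function deduplicates and sorts before applying changes, feeding it the same events twice produces the same rows, which is the idempotency property mentioned above.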

Lead Quality Process (Reading Parquet and CSVs from S3 -> Transforming with Object-Oriented Design -> Postgres (Bronze, Silver, Gold Layers)).

This project uses a Dockerized environment to extract both Parquet and CSV data from S3 buckets, then loads and transforms them in PostgreSQL, following the Medallion Architecture.

Part 1 of 2 - Leveraging dbt-DuckDB to perform an Ingestion Step (Postgres -> AWS S3 Bucket (Parquet)).

This project uses a Dockerized environment to extract data from Postgres (as if it were data in “Production”). Then, it converts the data into Parquet files and saves them to an AWS S3 bucket. I used my AWS Free Tier account and implemented the dbt-DuckDB adapter to expand dbt’s core function (the Transformation step) into an Ingestion machine.

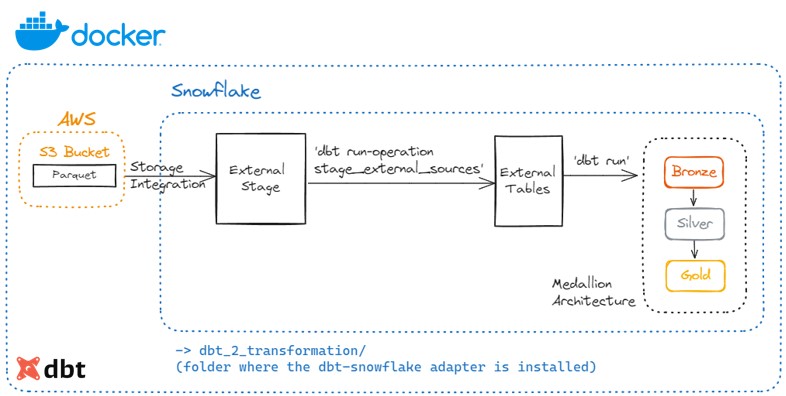

Part 2 of 2 - Leveraging dbt-Snowflake to perform a Transformation Step (Parquet in S3 -> Snowflake External Tables -> Transformation in Snowflake via dbt).

This project uses a Dockerized environment to read Parquet files stored in S3 buckets. External Tables were created in Snowflake following Snowflake’s Storage Integration and External Stage procedures. Then, dbt performs the Transformation step and materializes dimensions and facts in the Silver layer and aggregated tables in the Gold schema, following the Medallion Architecture and Kimball’s Dimensional Modeling.

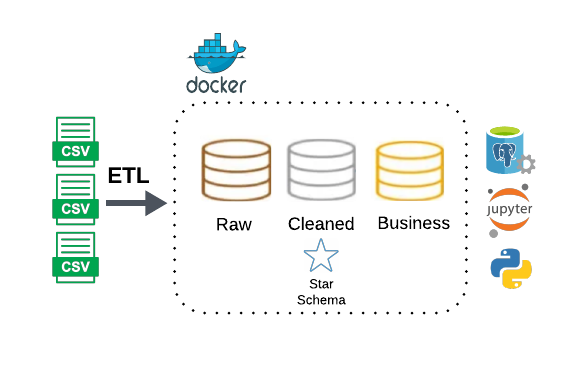

ETL (Medallion Architecture and Kimball Dimensional Modeling) for Machine Learning (Churn Prediction), with Dockerized Postgres, Jupyter Notebook, and Python.

I built an ETL pipeline in which Python functions perform each ETL step. This project runs within a Dockerized environment, using PostgreSQL as a database and Jupyter Notebook as a quick way to interact with the data and materialize schemas and tables. The ETL process followed the Medallion Architecture (bronze, silver, and gold schemas) and Kimball’s Dimensional Modeling (Star Schema).

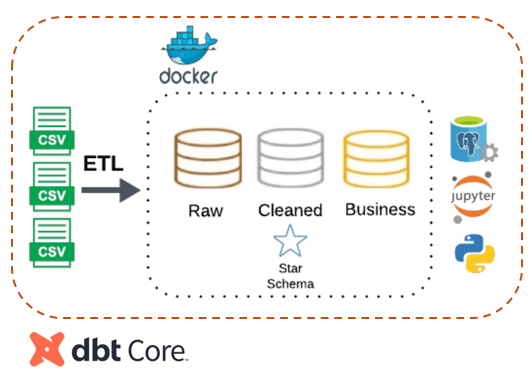

Migrating ETL (Medallion Architecture and Kimball Dimensional Modeling) to dbt, with Dockerized Postgres, Jupyter Notebook, and dbt.

I extended the previous project to mimic a migration of Python ETL processes to dbt, within a Dockerized environment. The data is extracted from multiple CSV files, and both the Transformation and Loading steps are done against PostgreSQL via dbt. The ETL process followed the Medallion Architecture (bronze, silver, and gold schemas) and Kimball’s Dimensional Modeling (Star Schema).

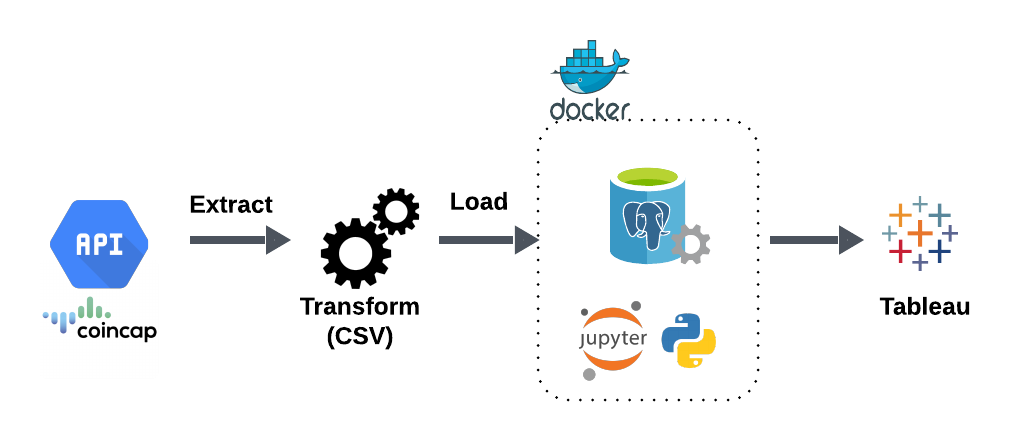

ETL Pipeline from Crypto API to Tableau (CSV), with Dockerized Postgres, Jupyter Notebook, and Python.

This ETL pipeline uses Python functions to perform ETL steps, extracting from an external API and transforming the data to be saved as CSV files for later use by Tableau or any other visualization tool. This project runs within a Dockerized environment, using PostgreSQL as a database and Jupyter Notebook as a quick way to interact with the data.

Applied Data Science and Machine Learning

Causal Inference (Propensity Score Matching & Difference-in-Differences): Measuring the Effect of a New Recommendation System on an E-Commerce Marketplace

Causal Inference (Difference-in-Differences): Measuring the Effect of a New Customer-Satisfaction Program on an Airline Company

Data Analytics with Python (Best Practices)

Data Cleaning - Preparing Categorical Data for Modeling

When datasets are large, it can take forever for a Machine Learning model to make predictions. We want to make sure that data is stored efficiently without having to change the size of the dataset.
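One way to store a categorical column more compactly while keeping every row is dictionary encoding: each distinct label is stored once, and the column itself holds small integer codes. The sketch below uses only the standard library, and its function names are hypothetical; pandas offers a `category` dtype built on the same idea.

```python
from array import array


def encode_categories(values):
    """Replace repeated labels with small integer codes (dictionary encoding)."""
    categories = sorted(set(values))
    code_of = {cat: code for code, cat in enumerate(categories)}
    # "H" is an unsigned short, enough for up to 65,535 distinct categories.
    codes = array("H", (code_of[v] for v in values))
    return categories, codes


def decode_categories(categories, codes):
    """Recover the original labels from the codes."""
    return [categories[c] for c in codes]


tiers = ["gold", "silver", "bronze", "gold"] * 1000
categories, codes = encode_categories(tiers)
print(categories)            # distinct labels, stored once
print(len(codes))            # same number of rows as the input
print(codes.itemsize)        # bytes used per row for the codes
```

The number of rows is unchanged; only the per-row representation shrinks, and the original labels can always be recovered with `decode_categories`.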

Data Cleaning - Parsing Date and Time Zone for Modeling

Best practices for cleaning dates, times, and time zones.
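As one illustration of such a practice (my assumption of a common one, not necessarily the one covered in the project), timestamps can be parsed with their UTC offset and normalized to UTC before modeling, while timestamps without an offset are rejected unless the caller states how to interpret them. The `to_utc` helper below is hypothetical and uses only the standard library.

```python
from datetime import datetime, timezone


def to_utc(timestamp, assume_utc=False):
    """Parse an ISO 8601 timestamp and return it as a timezone-aware UTC datetime."""
    parsed = datetime.fromisoformat(timestamp)
    if parsed.tzinfo is None:
        # A naive timestamp is ambiguous; refuse to guess unless told to.
        if not assume_utc:
            raise ValueError(f"timestamp has no UTC offset: {timestamp!r}")
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc)


print(to_utc("2024-03-10T08:30:00-03:00"))
print(to_utc("2024-03-10T08:30:00", assume_utc=True))
```

Failing loudly on naive timestamps keeps silent time zone mix-ups from reaching downstream features.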

Data Analysis and Inferential Statistics with Python

Advanced Mathematics, Statistics, and Machine Learning

I have a strong passion for teaching and educating others. A personal characteristic I am proud of is the ability to transform very complex subjects into intuitive topics for any audience.

I find happiness in the little things in life, and I have learned a lot from every mistake I have made so far (and still do).

As a hobby, I play football competitively (forward); it’s my passion. I have played in amateur leagues in Brazil, the USA, and the Netherlands.

Foundations of Data Science & Causal Machine Learning: A Mathematical Journey

I have a passion for teaching and for building bridges between mathematics, statistics, and real-world data problems. Over the years, I have been trained by inspiring professors in top universities around the globe, and I want to share that journey in an open and accessible way.

Currently, I’m working on a long-term project:

Foundations of Data Science & Causal Machine Learning: A Mathematical Journey.

This is an open study-book (and future course) where I document my path to mastering the mathematical foundations behind Data Science, Econometrics, and Causal Machine Learning.

The project is structured in phases, each one building on the previous:

- Phase 1 – Logic & Set Theory: the language of mathematics, proof techniques, quantifiers, families of sets.

- Phase 2 – Real Analysis: rigorous calculus, convergence, continuity, differentiation, integration.

- Phase 3 – Linear Algebra: vector spaces, eigenvalues, diagonalization, matrix decompositions.

- Phase 4 – Functional Analysis & Hilbert Spaces: normed spaces, orthogonality, projections, reproducing kernel Hilbert spaces (RKHS).

- Phase 5 – Topology & Measure Theory: open/closed sets, compactness, σ-algebras, Lebesgue measure and integration.

- Phase 6 – Probability: Kolmogorov’s axioms, random variables, convergence, laws of large numbers, central limit theorem.

- Phase 7 – Mathematical Statistics: estimation, properties of estimators, hypothesis testing, asymptotics.

- Phase 8 – Causality: structural causal models (Pearl), potential outcomes (Rubin), invariant prediction (Peters, Schölkopf), and modern Causal Machine Learning (Chernozhukov, Muandet).

Throughout the journey, I aim to combine:

- Rigor (formal proofs and measure-theoretic grounding),

- Intuition (clear explanations and examples), and

- Applications (connections to ML models, econometrics, and real-world data problems).

The book is freely available online in English: